Found 11 articles matching "Docker"

-

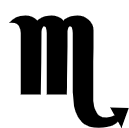

Quickly install Home Assistant with Docker

We introduced Home Assistant in an earlier post. The simplest way to install it is with Docker, in a single command:

    docker run -d \
      --name=home_assistant \
      -e TZ="Asia/Shanghai" \
      -v hass_config:/config \
      -v /dev/bus/usb:/dev/bus/usb \
      -v /var/run/dbus:/var/run/dbus \
      --net=host \
      --privileged \
      --restart unless-stopped \
      homeassistant/home-assistant:stable

Once the container is up, open http://[IP]:8123 to see the web interface.

-

Linux: checking disk usage and cleaning up Docker space

Show file system usage:

    df -h

Show how much space each directory or file takes:

    du -sh * | sort -h
    du -h --max-depth=1

Show the size of Docker's data directory:

    du -hs /var/lib/docker/

Show Docker's own disk usage summary:

    docker system df

Clean up the disk. This removes stopped containers, unused networks, and dangling images (images with no tag). On current Docker versions, unused volumes are only removed when `--volumes` is added:

    docker system prune

Clean up more thoroughly by also removing every image that no container uses. Note that both commands delete containers you have only stopped temporarily, and the second one also deletes images that are merely unused for now:

    docker system prune -a

Truncate the log of one container:

    docker inspect <container name> | grep LogPath | cut -d ':' -f 2 | cut -d ',' -f 1 | xargs echo | xargs truncate -s 0

Job script that truncates the logs of all containers:

    #!/bin/sh
    ls -lh $(find /var/lib/docker/containers/ -name '*-json.log')
    echo "==================== start clean docker containers logs =========================="
    logs=$(find /var/lib/docker/containers/ -name '*-json.log')
    for log in $logs
    do
      echo "clean logs : $log"
      cat /dev/null > "$log"
    done
    echo "==================== end clean docker containers logs =========================="
    ls -lh $(find /var/lib/docker/containers/ -name '*-json.log')

Limit the Docker log size. Edit the Docker configuration file:

    nano /etc/docker/daemon.json

Add the following settings, which cap each container at one log file of at most 50 MB:

    {
      "log-driver": "json-file",
      "log-opts": {"max-size": "50m", "max-file": "1"}
    }

Restart the Docker service:

    systemctl daemon-reload
    systemctl restart docker

Find which container each overlay2 directory belongs to:

```bash
for container in $(docker ps --all --quiet --format '{{ .Names }}'); do
  echo "$(docker inspect $container --format '{{.GraphDriver.Data.MergedDir }}' | \
    grep -Po '^.+?(?=/merged)' ) = $container"
done
```
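The log limit above usually has to be merged into whatever `/etc/docker/daemon.json` already contains. The following is a minimal Python sketch of that merge, assuming the file holds a single JSON object. The function name `with_log_limits` is hypothetical, and the sketch only builds the new file text; it does not write the file or restart Docker.

```python
import json


def with_log_limits(existing_text, max_size="50m", max_file=1):
    """Return daemon.json text with json-file log limits merged in.

    `existing_text` is the current file content ("" if the file is missing).
    Other top-level keys are preserved unchanged.
    """
    config = json.loads(existing_text) if existing_text.strip() else {}
    config["log-driver"] = "json-file"
    log_opts = dict(config.get("log-opts", {}))
    # log-opts values are written as strings, as in the example above.
    log_opts["max-size"] = str(max_size)
    log_opts["max-file"] = str(max_file)
    config["log-opts"] = log_opts
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Sample input only; replace with the real file content.
    current = '{"registry-mirrors": ["https://registry.docker-cn.com"]}'
    print(with_log_limits(current))
```

Merging instead of overwriting keeps settings such as registry mirrors intact when the log options are added later.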

-

Deploying an ngrok reverse proxy with Docker

dhso/ngrok: another ngrok client, written in Python.

Start the ngrokd service:

    docker run -d \
      --name ngrokd \
      --net=host \
      --restart=always \
      sequenceiq/ngrokd:latest \
      -httpAddr=:4480 \
      -httpsAddr=:4444 \
      -domain=xxx.com

Remember to update your DNS records so that the wildcard domain resolves to the server:

    A | *.xxx.com | xxx.xxx.xxx.xxx

Run the ngrok client. `NGROK_HOST` takes either the ngrokd domain (xxx.com) or its IP address (xxx.xxx.xxx.xxx):

    docker run -d \
      --name ngrok \
      --net=host \
      --restart=always \
      -e NGROK_HOST=xxx.com \
      -e NGROK_PORT=4443 \
      -e NGROK_BUFSIZE=8192 \
      -v ngrok_app:/app \
      dhso/ngrok:latest

Config:

| ENV | VAL |
| --- | --- |
| NGROK_HOST | your ngrokd domain or IP |
| NGROK_PORT | default 4443 |
| NGROK_BUFSIZE | default 8192 |

In the ngrok container:

1. cd /app
2. edit ngrok.json
3. save ngrok.json and restart the ngrok container

ngrok.json example:

    [{
      "protocol": "http",
      "hostname": "www.xxx.com",
      "subdomain": "",
      "rport": 0,
      "lhost": "127.0.0.1",
      "lport": 80
    },{
      "protocol": "http",
      "hostname": "",
      "subdomain": "www",
      "rport": 0,
      "lhost": "127.0.0.1",
      "lport": 80
    },{
      "protocol": "tcp",
      "hostname": "",
      "subdomain": "",
      "rport": 2222,
      "lhost": "127.0.0.1",
      "lport": 22
    }]

Docker Hub: https://hub.docker.com/r/dhso/ngrok

GitHub: https://github.com/dhso/ngrok-python
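Writing ngrok.json by hand gets error-prone once there are more than a few tunnels. The sketch below generates the same structure as the example above from Python. The helper name `tunnel` is hypothetical and not part of dhso/ngrok; only the six field names are taken from the example, and no validation beyond that is assumed.

```python
import json


def tunnel(protocol, lport, hostname="", subdomain="", rport=0, lhost="127.0.0.1"):
    """Build one ngrok.json entry with the field names used in the example."""
    return {
        "protocol": protocol,
        "hostname": hostname,
        "subdomain": subdomain,
        "rport": rport,
        "lhost": lhost,
        "lport": lport,
    }


def build_config(tunnels):
    """Serialize a list of tunnel entries as ngrok.json text."""
    return json.dumps(list(tunnels), indent=2)


if __name__ == "__main__":
    # The three tunnels from the example: two HTTP tunnels and one TCP tunnel for SSH.
    print(build_config([
        tunnel("http", 80, hostname="www.xxx.com"),
        tunnel("http", 80, subdomain="www"),
        tunnel("tcp", 22, rport=2222),
    ]))
```

The output can be saved as /app/ngrok.json in the container, followed by a container restart as described in the steps above.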

-

Ports that Docker Swarm needs opened in iptables

    # TCP port 2376: secure Docker client communication
    iptables -I INPUT -p tcp --dport 2376 -j ACCEPT
    # TCP port 2377: cluster management, only needs to be open on manager nodes
    iptables -I INPUT -p tcp --dport 2377 -j ACCEPT
    # TCP and UDP port 7946: communication between nodes (container network discovery)
    iptables -I INPUT -p tcp --dport 7946 -j ACCEPT
    iptables -I INPUT -p udp --dport 7946 -j ACCEPT
    # UDP port 4789: overlay network traffic (container ingress network)
    iptables -I INPUT -p udp --dport 4789 -j ACCEPT
    # Portainer endpoint port
    iptables -I INPUT -p tcp --dport 9001 -j ACCEPT
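Since port 2377 only needs to be open on managers, the rule set differs per node role. The sketch below keeps the Swarm ports from the list above in one table and prints the matching iptables commands. The names `SWARM_PORTS` and `iptables_rules` are hypothetical, the Portainer port is left out, and nothing is executed: the commands are only generated as text.

```python
# (protocol, port, purpose), taken from the list above.
SWARM_PORTS = [
    ("tcp", 2376, "secure Docker client communication"),
    ("tcp", 2377, "cluster management (manager nodes only)"),
    ("tcp", 7946, "communication between nodes"),
    ("udp", 7946, "communication between nodes"),
    ("udp", 4789, "overlay network traffic"),
]

MANAGER_ONLY_PORTS = {2377}


def iptables_rules(is_manager):
    """Return the iptables commands for a manager or a worker node."""
    rules = []
    for protocol, port, _purpose in SWARM_PORTS:
        if port in MANAGER_ONLY_PORTS and not is_manager:
            continue  # workers do not need the management port
        rules.append(f"iptables -I INPUT -p {protocol} --dport {port} -j ACCEPT")
    return rules


if __name__ == "__main__":
    for rule in iptables_rules(is_manager=False):
        print(rule)
```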

-

Docker registry and npm registry

docker:

    docker-proxy-163: https://hub-mirror.c.163.com
    docker-proxy-dockerhub: https://registry-1.docker.io
    docker-proxy-ustc: https://docker.mirrors.ustc.edu.cn

npm:

    npm-proxy-cnpm: https://registry.npm.taobao.org
    npm-proxy-npmjs: https://registry.npmjs.org

.npmrc:

    registry=https://registry.npm.taobao.org
    sass_binary_site=http://npm.taobao.org/mirrors/node-sass
    electron_mirror=http://npm.taobao.org/mirrors/electron/

-

Deploying JupyterHub with Docker, with JupyterLab and GitHub authentication

This post shows how to run JupyterHub in Docker and use GitHub for login. After a user logs in, JupyterHub creates a single-user Docker container for them, based on a custom user image with JupyterLab enabled.

Pull the images:

    docker pull jupyterhub/jupyterhub
    docker pull jupyterhub/singleuser:0.9

Create the jupyterhub_network network:

    docker network create --driver bridge jupyterhub_network

Create the directory that serves as the JupyterHub volume:

    sudo mkdir -pv /data/jupyterhub
    sudo chown -R root /data/jupyterhub
    sudo chmod -R 777 /data/jupyterhub

Copy jupyterhub_config.py to the volume:

    cp jupyterhub_config.py /data/jupyterhub/jupyterhub_config.py

jupyterhub_config.py:

    # Configuration file for Jupyter Hub
    import os

    c = get_config()

    # Spawn with Docker
    c.JupyterHub.spawner_class = 'dockerspawner.DockerSpawner'

    # Spawn containers from this image
    c.DockerSpawner.image = 'dhso/jupyter_lab_singleuser:latest'

    # JupyterHub requires a single-user instance of the Notebook server, so we
    # default to using the `start-singleuser.sh` script included in the
    # jupyter/docker-stacks *-notebook images as the Docker run command when
    # spawning containers. Optionally, you can override the Docker run command
    # using the DOCKER_SPAWN_CMD environment variable.
    c.DockerSpawner.extra_create_kwargs.update({
        'command': "start-singleuser.sh --SingleUserNotebookApp.default_url=/lab"
    })

    # Connect containers to this Docker network
    network_name = 'jupyterhub_network'
    c.DockerSpawner.use_internal_ip = True
    c.DockerSpawner.network_name = network_name

    # Pass the network name as argument to spawned containers
    c.DockerSpawner.extra_host_config = { 'network_mode': network_name }

    # Explicitly set notebook directory because we'll be mounting a host volume to
    # it. Most jupyter/docker-stacks *-notebook images run the Notebook server as
    # user `jovyan`, and set the notebook directory to `/home/jovyan/work`.
    # We follow the same convention.
    notebook_dir = '/home/jovyan/work'
    c.DockerSpawner.notebook_dir = notebook_dir

    # Mount the real user's Docker volume on the host to the notebook user's
    # notebook directory in the container
    c.DockerSpawner.volumes = { 'jupyterhub-user-{username}': notebook_dir }

    # volume_driver is no longer a keyword argument to create_container()
    # c.DockerSpawner.extra_create_kwargs.update({ 'volume_driver': 'local' })

    # Remove containers once they are stopped
    c.DockerSpawner.remove_containers = True

    # For debugging arguments passed to spawned containers
    c.DockerSpawner.debug = True

    # The docker instances need access to the Hub, so the default loopback port doesn't work:
    # from jupyter_client.localinterfaces import public_ips
    # c.JupyterHub.hub_ip = public_ips()[0]
    c.JupyterHub.hub_ip = 'jupyterhub'

    # IP Configurations
    c.JupyterHub.ip = '0.0.0.0'
    c.JupyterHub.port = 80

    # OAuth with GitHub
    c.JupyterHub.authenticator_class = 'oauthenticator.GitHubOAuthenticator'
    c.Authenticator.whitelist = whitelist = set()
    c.Authenticator.admin_users = admin = set()

    os.environ['GITHUB_CLIENT_ID'] = 'your own GITHUB_CLIENT_ID'
    os.environ['GITHUB_CLIENT_SECRET'] = 'your own GITHUB_CLIENT_SECRET'
    os.environ['OAUTH_CALLBACK_URL'] = 'your own OAUTH_CALLBACK_URL, similar to http://xxx/hub/oauth_callback'

    join = os.path.join
    here = os.path.dirname(__file__)
    with open(join(here, 'userlist')) as f:
        for line in f:
            # Skip blank lines; a raw line still carries its newline, so strip first
            if not line.strip():
                continue
            parts = line.split()
            name = parts[0]
            whitelist.add(name)
            if len(parts) > 1 and parts[1] == 'admin':
                admin.add(name)

    c.GitHubOAuthenticator.oauth_callback_url = os.environ['OAUTH_CALLBACK_URL']

Copy userlist to the volume. The userlist file stores the user names and their permissions:

    cp userlist /data/jupyterhub/userlist

userlist:

    dhso admin
    wengel

Build the JupyterHub image:

    docker build -t dhso/jupyterhub .

Dockerfile:

    ARG BASE_IMAGE=jupyterhub/jupyterhub
    FROM ${BASE_IMAGE}
    RUN pip install --no-cache --upgrade jupyter
    RUN pip install --no-cache dockerspawner
    RUN pip install --no-cache oauthenticator
    EXPOSE 80

Build the single-user Jupyter image with JupyterLab enabled:

    docker build -t dhso/jupyter_lab_singleuser .

Dockerfile:

    ARG BASE_IMAGE=jupyterhub/singleuser
    FROM ${BASE_IMAGE}
    # Speed up downloads with a mirror
    # RUN conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/
    # RUN conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/
    # RUN conda config --set show_channel_urls yes
    # Install jupyterlab
    # RUN conda install -c conda-forge jupyterlab
    RUN pip install jupyterlab
    RUN jupyter serverextension enable --py jupyterlab --sys-prefix
    USER jovyan

Create the JupyterHub container and map port 80:

    docker run -d --name jupyterhub -p 80:80 \
      --network jupyterhub_network \
      -v /var/run/docker.sock:/var/run/docker.sock \
      -v /data/jupyterhub:/srv/jupyterhub dhso/jupyterhub:latest

Open localhost in a browser to see the interface.
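The userlist parsing in jupyterhub_config.py is easier to check when pulled out into a plain function. This is a minimal sketch under that assumption; the name `parse_userlist` is hypothetical and not part of JupyterHub. A line read from a file keeps its trailing newline, so a blank line is not falsy on its own; the sketch tests the result of `split()` instead.

```python
def parse_userlist(lines):
    """Parse userlist lines of the form "<name> [admin]".

    Returns (allowed_users, admin_users) as two sets.
    """
    allowed, admins = set(), set()
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # blank or whitespace-only line
        name = parts[0]
        allowed.add(name)
        if len(parts) > 1 and parts[1] == "admin":
            admins.add(name)
    return allowed, admins


if __name__ == "__main__":
    # Same content as the userlist file above, plus an empty line.
    sample = ["dhso admin\n", "\n", "wengel\n"]
    allowed, admins = parse_userlist(sample)
    print(sorted(allowed), sorted(admins))
```

In the config file, the two returned sets would be assigned to `c.Authenticator.whitelist` and `c.Authenticator.admin_users`.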

-

Notes on installing Swarm

    ssh root@40.73.96.111
    ssh root@40.73.99.31
    ssh root@40.73.96.219

    docker swarm join --token SWMTKN-1-2g1m3acikt9jfj1mnhyfqyta2e4w58we0lapdyri8i8aec3ndz-e1pztefxdo6nxu85n493y2g5p 172.16.5.5:2377

### docker ###

    yum remove docker docker-client docker-client-latest docker-common \
      docker-latest docker-latest-logrotate docker-logrotate \
      docker-selinux docker-engine-selinux docker-engine
    yum install -y yum-utils device-mapper-persistent-data lvm2
    yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
    yum install docker-ce
    systemctl start docker
    systemctl enable docker
    nano /etc/docker/daemon.json

/etc/docker/daemon.json:

    {
      "registry-mirrors": ["https://registry.docker-cn.com"],
      "insecure-registries": ["172.16.5.5:9060"]
    }

Reload and restart:

    systemctl daemon-reload
    systemctl restart docker.service

### swarm ###

    # Initialize the swarm manager and specify the advertised address
    docker swarm init --advertise-addr 192.168.10.117
    # Leave the swarm; on a manager, --force is required
    docker swarm leave --force
    docker node rm docker-118
    # Show the join token for workers
    docker swarm join-token worker
    # Show the join token for managers
    docker swarm join-token manager
    # Invalidate the old token and generate a new one
    docker swarm join-token --rotate
    # Join the swarm
    docker swarm join --token SWMTKN-1-5d2ipwo8jqdsiesv6ixze20w2toclys76gyu4zdoiaf038voxj-8sbxe79rx5qt14ol14gxxa3wf 192.168.10.117:2377
    # List the nodes in the swarm
    docker node ls
    # Show details of a node
    docker node inspect docker-117 --pretty
    # active: the scheduler can assign tasks to the node
    docker node update --availability active docker-118
    # pause: the scheduler assigns no new tasks, existing tasks keep running
    docker node update --availability pause docker-118
    # drain: the scheduler assigns no new tasks, shuts down existing tasks
    # and reschedules them on available nodes
    docker node update --availability drain docker-118
    # Add and remove node labels
    docker node update --label-add label1 --label-add bar=label2 docker-117
    docker node update --label-rm label1 docker-117
    # Promote a node to manager
    docker node promote docker-118
    # Demote a node to worker
    docker node demote docker-118

Services:

    # List services
    docker service ls
    # Show the tasks of a service
    docker service ps redis
    # Create a service without a name or replica count
    docker service create nginx
    # Create a service with a name
    docker service create --name my_web nginx
    # Create a service with a name and a run command
    docker service create --name helloworld alpine ping docker.com
    # Create a service with a name, an image version, and a run command
    docker service create --name helloworld alpine:3.6 ping docker.com
    # Create a service with a name, a port, and replicas
    docker service create --name my_web --replicas 3 -p 80:80 nginx
    # Publish a port on an existing service
    docker service update --publish-add 80:80 my_web
    # Remove a published port from a service
    docker service update --publish-rm 80:80 my_web
    # Update redis:3.0.6 to redis:3.0.7
    docker service update --image redis:3.0.7 redis
    # Configure the runtime environment: working directory, environment variables, user
    docker service create --name helloworld --env MYVAR=myvalue --workdir /tmp --user my_user alpine ping docker.com
    # Create a helloworld service
    docker service create --name helloworld alpine ping docker.com
    # Change the run command of the helloworld service
    docker service update --args "ping www.baidu.com" helloworld
    # Remove a service
    docker service rm my_web
    # Run a web service on every swarm node
    docker service create --name tomcat --mode global --publish mode=host,target=8080,published=8080 tomcat:latest

Networks:

    # Create an overlay network
    docker network create --driver overlay my_network
    docker network create --driver overlay --subnet 10.10.10.0/24 --gateway 10.10.10.1 my-network
    # Create a service attached to the network
    docker service create --name test --replicas 3 --network my-network redis
    # Detach a network from a service
    docker service update --network-rm my-network test
    # Attach a network to a service
    docker service update --network-add my_network test

Resources:

    # Create a service with reserved CPU and memory
    docker service create --name my_nginx --reserve-cpu 2 --reserve-memory 512m --replicas 3 nginx
    # Change the reserved CPU and memory
    docker service update --reserve-cpu 1 --reserve-memory 256m my_nginx

Update options:

- `--update-parallelism`: number of containers updated at a time
- `--update-delay`: interval between container updates
- `--update-monitor`: how long to monitor a container for failure after it starts
- `--update-max-failure-ratio`: tolerated ratio of failed containers
- `--update-failure-action`: action taken when a container fails to start

Updates and rollbacks:

    # Create a service with 3 replicas, a 10s delay between updates, pausing if 10% of tasks fail
    docker service create --name mysql_5_6_36 --replicas 3 --update-delay 10s --update-parallelism 1 --update-monitor 30s --update-failure-action pause --update-max-failure-ratio 0.1 -e MYSQL_ROOT_PASSWORD=123456 mysql:5.6.36
    # Roll back to the previous version
    docker service update --rollback mysql
    # Automatic rollback settings
    docker service create --name redis --replicas 6 --rollback-parallelism 2 --rollback-monitor 20s --rollback-max-failure-ratio .2 redis:latest
    # Create a service with a host directory mounted into the container
    docker service create --name mysql --publish 3306:3306 --mount type=bind,src=/data/mysql,dst=/var/lib/mysql --replicas 3 -e MYSQL_ROOT_PASSWORD=123456 mysql:5.6.36

Configs:

    # List configs
    docker config ls
    # Show details of a config
    docker config inspect mysql
    # Remove a config
    docker config rm mysql

### portainer ###

    docker volume create portainer_data
    docker service create \
      --name portainer \
      --publish 9000:9000 \
      --replicas=1 \
      --constraint 'node.role == manager' \
      --mount type=bind,src=//var/run/docker.sock,dst=/var/run/docker.sock \
      --mount type=volume,src=portainer_data,dst=/data \
      portainer/portainer \
      -H unix:///var/run/docker.sock

### gitlab ###

    docker volume create --name gitlab_config
    docker volume create --name gitlab_logs
    docker volume create --name gitlab_data
    docker service create --name swarm_gitlab \
      --publish 5002:443 --publish 5003:80 --publish 5004:22 \
      --replicas 1 \
      --mount type=volume,source=gitlab_config,destination=/etc/gitlab \
      --mount type=volume,source=gitlab_logs,destination=/var/log/gitlab \
      --mount type=volume,source=gitlab_data,destination=/var/opt/gitlab \
      --constraint 'node.labels.type == gitlab_node' \
      gitlab/gitlab-ce:latest

### mysql ###

Stack file fragment for the mysql service:

    mysql:
      image: mysql:5.6.40
      environment:
        # Set the time zone to Asia/Shanghai
        - TZ=Asia/Shanghai
        - MYSQL_ROOT_PASSWORD=admin@1234
      volumes:
        - mysql:/var/lib/mysql
      deploy:
        replicas: 1
        restart_policy:
          condition: any
        resources:
          limits:
            cpus: "0.2"
            memory: 512M
        update_config:
          parallelism: 1          # update 1 replica at a time
          delay: 5s               # interval between updates
          monitor: 10s            # an update that has not finished after this time counts as failed
          max_failure_ratio: 0.1  # maximum failure ratio tolerated during an update
          order: start-first      # start the new task before stopping the old one
      ports:
        - 3306:3306
      networks:
        - myswarm-net
    networks:
      myswarm-net:
        external: true

Compose file for GitLab:

    version: "3.2"
    services:
      web:
        image: 'gitlab/gitlab-ce:latest'
        restart: always
        environment:
          GITLAB_OMNIBUS_CONFIG: |
            external_url 'http://40.73.96.111:9030'
        ports:
          - '9030:80'
          - '9031:443'
          - '9032:22'
        volumes:
          - '/var/lib/docker/volumes/gitlab_config/_data:/etc/gitlab'
          - '/var/lib/docker/volumes/gitlab_logs/_data:/var/log/gitlab'
          - '/var/lib/docker/volumes/gitlab_data/_data:/var/opt/gitlab'

GitLab settings:

    # Access URL used for HTTP
    external_url 'http://40.73.96.111:9030'
    # Access host and port used for SSH
    gitlab_rails['gitlab_ssh_host'] = '40.73.96.111'
    gitlab_rails['gitlab_shell_ssh_port'] = 9032
    nginx['listen_port'] = 80
    # SMTP server settings, using a Sina mailbox as the example
    gitlab_rails['smtp_enable'] = true
    gitlab_rails['smtp_address'] = "smtp.sina.com"
    gitlab_rails['smtp_port'] = 25
    gitlab_rails['smtp_user_name'] = "name4mail"
    gitlab_rails['smtp_password'] = "passwd4mail"
    gitlab_rails['smtp_domain'] = "sina.com"
    gitlab_rails['smtp_authentication'] = :login
    gitlab_rails['smtp_enable_starttls_auto'] = true
    # The sender address must match the mailbox configured above
    gitlab_rails['gitlab_email_from'] = 'name4mail@sina.com'

Portainer agent stack:

    curl -L https://portainer.io/download/portainer-agent-stack.yml -o portainer-agent-stack.yml
    docker stack deploy --compose-file=portainer-agent-stack.yml portainer

MySQL remote access:

    -- remote
    use mysql;
    select host, user, authentication_string, plugin from user;
    GRANT ALL ON *.* TO 'root'@'%';
    flush privileges;
    -- mysql8
    ALTER USER 'root'@'localhost' IDENTIFIED BY 'admin@1234' PASSWORD EXPIRE NEVER;
    ALTER USER 'root'@'%' IDENTIFIED WITH mysql_native_password BY 'admin@1234';
    FLUSH PRIVILEGES;

### ace-center ###

    ace-center/target/ace-center.jar
    ace-center/target/

    docker rm -f ace-center
    sleep 1
    docker service create --name ace-center --publish 6010:8761 --replicas 1 -e JAR_PATH=/tmp/ace-center.jar dhso/springboot-app:1.0

Dockerfile:

    FROM java:8
    VOLUME /tmp
    ADD ace-center/target/ace-center.jar app.jar
    RUN bash -c 'touch /app.jar'
    ENTRYPOINT ["java","-Djava.security.egd=file:/dev/./urandom","-jar","/app.jar"]

Build and run from a locally built image:

    docker rm -f ace-center
    sleep 1
    docker rmi -f dhso/ace-center
    sleep 1
    cd /tmp/ace-center
    docker build -t dhso/ace-center .
    sleep 1
    docker service create --name ace-center --publish 6010:8761 --replicas 1 dhso/ace-center

    docker service create --name ace-center --publish 6010:8080 --replicas 1 -e JAR_PATH=/tmp/ace-center.jar dhso/springboot-app:1.0

Build, push to the private registry, and deploy:

    target/ace-center.jar,src/main/docker/Dockerfile
    ace-center

    docker service rm ace-center
    sleep 1s
    docker rm -f ace-center
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-center|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-center
    rm -rf docker
    mkdir docker
    cp target/ace-center.jar docker/ace-center.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cd docker
    docker build -t 172.16.5.5:9060/ace-center:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-center:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-center --network ace_network --constraint 'node.labels.type == worker' --publish 6010:8761 --replicas 1 172.16.5.5:9060/ace-center:latest

### ace-config ###

    target/ace-config.jar,src/main/docker/Dockerfile
    ace-config

    docker service rm ace-config
    sleep 1s
    docker rm -f ace-config
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-config|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-config
    rm -rf docker
    mkdir docker
    cp target/ace-config.jar docker/ace-config.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cd docker
    docker build -t 172.16.5.5:9060/ace-config:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-config:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-config --network ace_network --constraint 'node.labels.type == worker' --publish 6011:8750 --replicas 1 172.16.5.5:9060/ace-config:latest

### ace-auth ###

    target/ace-auth.jar,src/main/docker/Dockerfile
    ace-auth

    docker service rm ace-auth
    sleep 1s
    docker rm -f ace-auth
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-auth|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-auth
    rm -rf docker
    mkdir docker
    cp target/ace-auth.jar docker/ace-auth.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cd docker
    docker build -t 172.16.5.5:9060/ace-auth:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-auth:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-auth --network ace_network --constraint 'node.labels.type == worker' --publish 6013:9777 --replicas 1 172.16.5.5:9060/ace-auth:latest

### ace-admin ###

    target/ace-admin.jar,src/main/docker/Dockerfile,src/main/docker/wait-for-it.sh
    ace-admin

    docker service rm ace-admin
    sleep 1s
    docker rm -f ace-admin
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-admin|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-admin
    rm -rf docker
    mkdir docker
    cp target/ace-admin.jar docker/ace-admin.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cp src/main/docker/wait-for-it.sh docker/wait-for-it.sh
    cd docker
    docker build -t 172.16.5.5:9060/ace-admin:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-admin:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-admin --network ace_network --constraint 'node.labels.type == worker' --publish 6014:8762 --replicas 1 172.16.5.5:9060/ace-admin:latest

### ace-gate ###

    target/ace-gate.jar,src/main/docker/Dockerfile,src/main/docker/wait-for-it.sh
    ace-gate

    docker service rm ace-gate
    sleep 1s
    docker rm -f ace-gate
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-gate|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-gate
    rm -rf docker
    mkdir docker
    cp target/ace-gate.jar docker/ace-gate.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cp src/main/docker/wait-for-it.sh docker/wait-for-it.sh
    cd docker
    docker build -t 172.16.5.5:9060/ace-gate:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-gate:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-gate --network ace_network --constraint 'node.labels.type == worker' --publish 6015:8765 --replicas 1 172.16.5.5:9060/ace-gate:latest

### ace-dict ###

    target/ace-dict.jar,src/main/docker/Dockerfile,src/main/docker/wait-for-it.sh
    ace-dict

    docker service rm ace-dict
    sleep 1s
    docker rm -f ace-dict
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-dict|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-dict
    rm -rf docker
    mkdir docker
    cp target/ace-dict.jar docker/ace-dict.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cp src/main/docker/wait-for-it.sh docker/wait-for-it.sh
    cd docker
    docker build -t 172.16.5.5:9060/ace-dict:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-dict:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-dict --network ace_network --constraint 'node.labels.type == worker' --publish 6016:9999 --replicas 1 172.16.5.5:9060/ace-dict:latest

### ace-ui ###

Dockerfile:

    FROM node:8-alpine
    RUN mkdir webapp
    ADD . ./webapp
    RUN npm config set registry https://registry.npm.taobao.org
    RUN npm install -g http-server
    WORKDIR ./webapp
    CMD http-server -p 9527
    EXPOSE 9527

Build and deploy:

    dist/*,Dockerfile
    ace-ui

    docker service rm ace-ui
    sleep 1s
    docker rm -f ace-ui
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-ui|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-ui
    cp Dockerfile dist/Dockerfile
    cd dist
    docker build -t 172.16.5.5:9060/ace-ui:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-ui:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-ui --network ace_network --constraint 'node.labels.type == worker' --publish 6012:9527 --replicas 1 172.16.5.5:9060/ace-ui:latest

### ace-monitor ###

    target/ace-monitor.jar,src/main/docker/Dockerfile
    ace-monitor

    docker service rm ace-monitor
    sleep 1s
    docker rm -f ace-monitor
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-monitor|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-monitor
    rm -rf docker
    mkdir docker
    cp target/ace-monitor.jar docker/ace-monitor.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cd docker
    docker build -t 172.16.5.5:9060/ace-monitor:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-monitor:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-monitor --network ace_network --constraint 'node.labels.type == worker' --publish 6017:8764 --replicas 1 172.16.5.5:9060/ace-monitor:latest

### ace-trace ###

    target/ace-trace.jar,src/main/docker/Dockerfile
    ace-trace

    docker service rm ace-trace
    sleep 1s
    docker rm -f ace-trace
    sleep 1s
    docker images|grep 172.16.5.5:9060/ace-trace|awk '{print $3}'|xargs docker rmi -f
    sleep 1s
    cd /tmp/ace-trace
    rm -rf docker
    mkdir docker
    cp target/ace-trace.jar docker/ace-trace.jar
    cp src/main/docker/Dockerfile docker/Dockerfile
    cd docker
    docker build -t 172.16.5.5:9060/ace-trace:latest .
    sleep 1s
    docker push 172.16.5.5:9060/ace-trace:latest
    sleep 1s
    docker network create --driver overlay --subnet 10.222.0.0/16 ace_network
    sleep 1s
    docker service create --name ace-trace --network ace_network --constraint 'node.labels.type == worker' --publish 6018:9411 --replicas 1 172.16.5.5:9060/ace-trace:latest

Supporting services (redis, mysql, rabbitmq):

    docker service create --name redis_01 --mount type=volume,src=redis_data,dst=/data \
      --network ace_network --constraint 'node.labels.type == manager' --publish 9050:6379 --replicas 1 redis:latest

    docker service create --name mysql_01 --mount type=volume,src=mysql_data,dst=/var/lib/mysql \
      --env MYSQL_ROOT_PASSWORD=admin@1234 --network ace_network \
      --constraint 'node.labels.type == manager' --publish 9051:3306 --replicas 1 mysql:5.6

    /usr/bin/mysqladmin -u root password 'admin@1234'

    docker service create --name rabbitmq_01 --mount type=volume,src=rabbitmq,dst=/var/lib/rabbitmq \
      --network ace_network --constraint 'node.labels.type == manager' \
      --publish 9052:5671 --publish 9053:5672 --publish 9054:15672 --replicas 1 rabbitmq:latest

Install htop:

    yum install -y epel-release
    yum install -y htop
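The ace-* deployment scripts above differ only in the service name and the published port pair. A small Python sketch that derives the final `docker service create` command from a table follows. The names `SERVICES` and `service_create_command` are hypothetical; the registry address, network, label, and ports are copied from the notes. The command is only built as a string, nothing is run.

```python
import shlex

REGISTRY = "172.16.5.5:9060"

# Service name -> (published port, container port), as used in the notes.
SERVICES = {
    "ace-center": (6010, 8761),
    "ace-config": (6011, 8750),
    "ace-ui": (6012, 9527),
    "ace-auth": (6013, 9777),
    "ace-admin": (6014, 8762),
    "ace-gate": (6015, 8765),
    "ace-dict": (6016, 9999),
    "ace-monitor": (6017, 8764),
    "ace-trace": (6018, 9411),
}


def service_create_command(name, network="ace_network", label="worker", replicas=1):
    """Build the `docker service create` command line for one ace service."""
    published, target = SERVICES[name]
    args = [
        "docker", "service", "create",
        "--name", name,
        "--network", network,
        "--constraint", f"node.labels.type == {label}",
        "--publish", f"{published}:{target}",
        "--replicas", str(replicas),
        f"{REGISTRY}/{name}:latest",
    ]
    # shlex.quote adds quotes only where the shell needs them (the constraint).
    return " ".join(shlex.quote(arg) for arg in args)


if __name__ == "__main__":
    for service in SERVICES:
        print(service_create_command(service))
```

Keeping the port pairs in one table makes collisions between published ports easy to spot before deploying.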

-

Deploying a MongoDB sharded cluster with replica sets on Docker

A sharded cluster is usually adopted to solve two problems:

Storage capacity is limited by a single machine, i.e. disk becomes the bottleneck.
Read/write throughput is limited by a single machine (read capacity can also be scaled by adding secondary nodes to a replica set). CPU, memory, or the network card may be the bottleneck that stops reads and writes from scaling.

Sharded cluster diagram

Create three config servers (configsvr)

    docker run -d -p 40001:40001 --privileged=true -v cnf40001:/data/db --name cnf_c1 mongo:latest --configsvr --port 40001 --dbpath /data/db --replSet crs
    docker run -d -p 40002:40002 --privileged=true -v cnf40002:/data/db --name cnf_c2 mongo:latest --configsvr --port 40002 --dbpath /data/db --replSet crs
    docker run -d -p 40003:40003 --privileged=true -v cnf40003:/data/db --name cnf_c3 mongo:latest --configsvr --port 40003 --dbpath /data/db --replSet crs

Connect to any member of the crs replica set

    mongo --port 40001

Switch to the admin database

    use admin

Define the replica set configuration

    config = {_id:"crs", configsvr:true, members:[
        {_id:0,host:"192.168.31.82:40001"},
        {_id:1,host:"192.168.31.82:40002"},
        {_id:2,host:"192.168.31.82:40003"}
      ]
    }

Initialize the replica set

    rs.initiate(config)

If it has already been initialized, force the new configuration instead

    rs.reconfig(config,{force:true})

Check the replica set status

    rs.status()

Create two shard services (shardsvr). Each shardsvr is a three-member replica set: one primary, one secondary, and one arbiter. Start with rs1:

    docker run -d -p 20001:20001 --privileged=true -v db20001:/data/db --name rs1_c1 mongo:latest --shardsvr --port 20001 --dbpath /data/db --replSet rs1
    docker run -d -p 20002:20002 --privileged=true -v db20002:/data/db --name rs1_c2 mongo:latest --shardsvr --port 20002 --dbpath /data/db --replSet rs1
    docker run -d -p 20003:20003 --privileged=true -v db20003:/data/db --name rs1_c3 mongo:latest --shardsvr --port 20003 --dbpath /data/db --replSet rs1

Connect to any member of the rs1 shard

    mongo --port 20001

Switch to the admin database

    use admin

Define the replica set configuration

    config = {_id:"rs1",members:[
        {_id:0,host:"192.168.31.82:20001"},
        {_id:1,host:"192.168.31.82:20002"},
        {_id:2,host:"192.168.31.82:20003",arbiterOnly:true}
      ]
    }

Initialize the replica set

    rs.initiate(config)

If it has already been initialized, force the new configuration instead

    rs.reconfig(config,{force:true})

Check the replica set status

    rs.status()

Result

    rs1:RECOVERING> rs.status()
    {
        "set" : "rs1",
        "date" : ISODate("2016-12-20T09:01:16.108Z"),
        "myState" : 1,
        "term" : NumberLong(1),
        "heartbeatIntervalMillis" : NumberLong(2000),
        "members" : [
            {
                "_id" : 0,
                "name" : "192.168.31.82:20001",
                "health" : 1,
                "state" : 1,
                "stateStr" : "PRIMARY",
                "uptime" : 7799,
                "optime" : { "ts" : Timestamp(1482224415, 1), "t" : NumberLong(1) },
                "optimeDate" : ISODate("2016-12-20T09:00:15Z"),
                "infoMessage" : "could not find member to sync from",
                "electionTime" : Timestamp(1482224414, 1),
                "electionDate" : ISODate("2016-12-20T09:00:14Z"),
                "configVersion" : 1,
                "self" : true
            },
            {
                "_id" : 1,
                "name" : "192.168.31.82:20002",
                "health" : 1,
                "state" : 2,
                "stateStr" : "SECONDARY",
                "uptime" : 71,
                "optime" : { "ts" : Timestamp(1482224415, 1), "t" : NumberLong(1) },
                "optimeDate" : ISODate("2016-12-20T09:00:15Z"),
                "lastHeartbeat" : ISODate("2016-12-20T09:01:15.016Z"),
                "lastHeartbeatRecv" : ISODate("2016-12-20T09:01:15.376Z"),
                "pingMs" : NumberLong(1),
                "syncingTo" : "192.168.30.200:20001",
                "configVersion" : 1
            },
            {
                "_id" : 2,
                "name" : "192.168.31.82:20003",
                "health" : 1,
                "state" : 7,
                "stateStr" : "ARBITER",
                "uptime" : 71,
                "lastHeartbeat" : ISODate("2016-12-20T09:01:15.016Z"),
                "lastHeartbeatRecv" : ISODate("2016-12-20T09:01:11.334Z"),
                "pingMs" : NumberLong(0),
                "configVersion" : 1
            }
        ],
        "ok" : 1
    }

Then create the second shard, rs2, the same way (again one primary, one secondary, and one arbiter):

    docker run -d -p 30001:30001 --privileged=true -v db30001:/data/db --name rs2_c1 mongo:latest --shardsvr --port 30001 --dbpath /data/db --replSet rs2
    docker run -d -p 30002:30002 --privileged=true -v db30002:/data/db --name rs2_c2 mongo:latest --shardsvr --port 30002 --dbpath /data/db --replSet rs2
    docker run -d -p 30003:30003 --privileged=true -v db30003:/data/db --name rs2_c3 mongo:latest --shardsvr --port 30003 --dbpath /data/db --replSet rs2

Connect to any member of the rs2 shard

    mongo --port 30001

Switch to the admin database

    use admin

Define the replica set configuration

    config = {_id:"rs2",members:[
        {_id:0,host:"192.168.31.82:30001"},
        {_id:1,host:"192.168.31.82:30002"},
        {_id:2,host:"192.168.31.82:30003",arbiterOnly:true}
      ]
    }

Initialize the replica set

    rs.initiate(config)

If it has already been initialized, force the new configuration instead

    rs.reconfig(config,{force:true})

Check the replica set status

    rs.status()

Create two router services (mongos)

    docker run -d -p 50001:50001 --privileged=true --name ctr50001 mongo:latest mongos --configdb crs/192.168.31.82:40001,192.168.31.82:40002,192.168.31.82:40003 --port 50001 --bind_ip 0.0.0.0
    docker run -d -p 50002:50002 --privileged=true --name ctr50002 mongo:latest mongos --configdb crs/192.168.31.82:40001,192.168.31.82:40002,192.168.31.82:40003 --port 50002 --bind_ip 0.0.0.0

Register the shards with the config servers through mongos. Connect to a router:

    mongo --port 50001

Switch to the admin database

    use admin

Add the shards

    db.runCommand({addshard:"rs1/192.168.31.82:20001,192.168.31.82:20002,192.168.31.82:20003"})
    db.runCommand({addshard:"rs2/192.168.31.82:30001,192.168.31.82:30002,192.168.31.82:30003"})

List the shards (arbiter nodes are not shown)

    db.runCommand({listshards:1})
    {
        "shards" : [
            { "_id" : "rs1", "host" : "rs1/192.168.31.82:20001,192.168.31.82:20002" },
            { "_id" : "rs2", "host" : "rs2/192.168.31.82:30001,192.168.31.82:30002" }
        ],
        "ok" : 1
    }

Test example

Enable sharding for the database and the collection. [Note: not every collection in a sharded database is sharded. Only collections configured with shardcollection are, because not every collection needs sharding.]

    db.runCommand({enablesharding:"mydb"})
    db.runCommand({shardcollection:"mydb.person", key:{id:1, company:1}})

Insert test data

    use mydb
    for (i = 0; i < 10000; i++) { db.person.save({id:i, company:"baidu"}) }

Check the result

    db.person.stats()
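The nine `docker run` commands for the config servers and the two shards differ only in port, volume name, container name, role flag, and replica set name. As a rough sketch (the helper name `replset_cmds` and its argument order are made up for this illustration, not part of Docker or MongoDB), a small shell function can print them so they can be reviewed before running:

```shell
#!/bin/sh
# Print (do not run) the docker commands for one three-member replica set.
# Arguments (all hypothetical conventions taken from the post's naming):
#   $1 container name prefix, $2 volume name prefix,
#   $3 role flag without dashes (configsvr or shardsvr),
#   $4 replica set name, $5 port of the first member
replset_cmds() {
    prefix=$1; volume=$2; role=$3; replset=$4; base_port=$5
    i=1
    while [ "$i" -le 3 ]; do
        port=$((base_port + i - 1))
        echo "docker run -d -p ${port}:${port} --privileged=true -v ${volume}${port}:/data/db --name ${prefix}_c${i} mongo:latest --${role} --port ${port} --dbpath /data/db --replSet ${replset}"
        i=$((i + 1))
    done
}

# Same ports and names as in the walkthrough.
replset_cmds cnf cnf configsvr crs 40001
replset_cmds rs1 db  shardsvr  rs1 20001
replset_cmds rs2 db  shardsvr  rs2 30001
```

Once the printed commands look right, the output can be piped to `sh` to actually start the containers.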

-

Building a docker + hadoop platform step by step (3)

Start the hadoop container

    docker run -itd --net=hadoop -p 50070:50070 -p 8088:8088 -p 8020:8020 --name hadoop-master --hostname hadoop-master -v /data:/mnt kiwenlau/hadoop:1.0

Enter the hadoop container

    docker exec -it hadoop-master bash

Stop hadoop, then start it again

    $HADOOP_HOME/sbin/stop-all.sh
    $HADOOP_HOME/sbin/start-all.sh
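The `docker run` command above attaches the container to a user-defined network named `hadoop`, and Docker refuses to start a container on a network that does not exist. This excerpt does not show where that network is created, so the following is only a sketch under the assumption that it may be missing; the helper name `ensure_network` is invented for illustration.

```shell
#!/bin/sh
# Create the named Docker network only if it does not exist yet.
# `docker network inspect` exits non-zero when the network is unknown,
# in which case `docker network create` is run with the default driver.
ensure_network() {
    net=$1
    docker network inspect "$net" >/dev/null 2>&1 || docker network create "$net"
}
```

Calling `ensure_network hadoop` before the `docker run` line makes the start step safe to repeat.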

-

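Building a docker + hadoop platform step by step (1)

Download the system image

Download the minimal ISO file from the CentOS site, http://101.96.10.38/isoredirect.centos.org/centos/7/isos/x86_64/CentOS-7-x86_64-Minimal-1511.iso, and use UltraISO to create a bootable USB drive.

Create the boot drive

In UltraISO:
File > Open > select the ISO file
Bootable > Write Disk Image
In the dialog, set the write method to "USB-HDD+" > Write > OK

Install the system

Boot from the USB drive. On the boot screen, press the Up key to highlight the first entry, Install CentOS 7.
Following the hint at the bottom of the screen, press e or b to edit the boot entry.
Change

    vmlinuz initrd=initrd.img inst.stage2=hd:LABEL=CentOS\x207\x20x86_64 quiet

to

    vmlinuz initrd=initrd.img inst.stage2=hd:/dev/sdb4 quiet

Press Ctrl+X to enter the graphical installer.
* When partitioning the disk, it is recommended to use standard partitions rather than LVM.

Configure the network

    vim /etc/sysconfig/network-scripts/ifcfg-eth0

The file may be named after a different network interface.

Settings to edit

    BOOTPROTO="static" # changed from dhcp to static
    ONBOOT="yes" # enable this configuration at boot
    IPADDR=192.168.1.171 # static IP
    GATEWAY=192.168.1.1 # default gateway
    NETMASK=255.255.255.0 # subnet mask
    DNS1=192.168.1.1 # DNS server

Full file content

    HWADDR="00:15:5D:07:F1:02"
    TYPE="Ethernet"
    BOOTPROTO="static" # changed from dhcp to static
    DEFROUTE="yes"
    PEERDNS="yes"
    PEERROUTES="yes"
    IPV4_FAILURE_FATAL="no"
    IPV6INIT="yes"
    IPV6_AUTOCONF="yes"
    IPV6_DEFROUTE="yes"
    IPV6_PEERDNS="yes"
    IPV6_PEERROUTES="yes"
    IPV6_FAILURE_FATAL="no"
    NAME="eth0"
    UUID="bb3a302d-dc46-461a-881e-d46cafd0eb71"
    ONBOOT="yes" # enable this configuration at boot
    IPADDR=192.168.7.106 # static IP
    GATEWAY=192.168.7.1 # default gateway
    NETMASK=255.255.255.0 # subnet mask
    DNS1=192.168.7.1 # DNS server

Restart the network service

    service network restart

Shut down

    shutdown now # shut down immediately
    shutdown +2 # shut down in 2 minutes
    shutdown 10:01 # shut down at 10:01
    shutdown +2 "The machine will shutdown" # shut down in 2 minutes and notify logged-in users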
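When several machines need the same static network settings, the edited lines can be generated instead of typed. The sketch below only prints the six settings shown above; the function name `static_ifcfg` is hypothetical, the /24 netmask is taken from the example, and using the gateway as the default DNS server is an assumption that mirrors the sample values.

```shell
#!/bin/sh
# Print the static-IP settings for an ifcfg file.
# $1 static IP, $2 gateway, $3 DNS server (defaults to the gateway).
static_ifcfg() {
    ip=$1; gateway=$2; dns=${3:-$2}
    cat <<EOF
BOOTPROTO="static"
ONBOOT="yes"
IPADDR=${ip}
GATEWAY=${gateway}
NETMASK=255.255.255.0
DNS1=${dns}
EOF
}

static_ifcfg 192.168.1.171 192.168.1.1
```

The output is meant to be reviewed and merged into the interface file by hand, since the existing file may already contain BOOTPROTO and ONBOOT lines that need replacing rather than appending.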

-

docker + shipyard + hadoop deployment plan

Related links

shipyard official site: https://shipyard-project.com/docs/deploy/automated/
tuicool article: http://www.tuicool.com/articles/FnmeuuN
segmentfault article: https://segmentfault.com/a/1190000002464365
GitHub, hadoop-cluster-docker: https://github.com/kiwenlau/hadoop-cluster-docker
kiwenlau's blog: http://kiwenlau.com/2016/06/12/160612-hadoop-cluster-docker-update/